Previous posts: https://programming.dev/post/3974121 and https://programming.dev/post/3974080

Original survey link: https://forms.gle/7Bu3Tyi5fufmY8Vc8

Thanks for all the answers, here are the results for the survey in case you were wondering how you did!

Edit: People working in CS or a related field have a 9.59 avg score while the people that aren’t have a 9.61 avg.

People that have used AI image generators before got a 9.70 avg, while people that haven’t have a 9.39 avg score.

Edit 2: The data has slightly changed! Over 1,000 people have submitted results since posting this image, check the dataset to see live results. Be aware that many people saw the image and comments before submitting, so they’ve gotten spoiled on some results, which may be leading to a higher average recently: https://docs.google.com/spreadsheets/d/1MkuZG2MiGj-77PGkuCAM3Btb1_Lb4TFEx8tTZKiOoYI

I’m not sure whether this skews anything, but technically AI images are curated more than anything: you take a few prompts, throw them into a black box that spits out a couple of images, refine, throw them back in, and repeat. So I don’t know if it’s fair to say people are getting fooled by AI-generated images rather than AI-curated ones, which I feel is an important distinction. These images were chosen because they look realistic.

Well, it does say “AI Generated”, which is what they are.

All of the images in the survey were either generated by AI and then curated by humans, or they were generated by humans and then curated by humans.

I imagine that you could also train an AI to select which images to present to a group of test subjects. Then, you could do a survey that has AI generated images that were curated by an AI, and compare them to human generated images that were curated by an AI.

All of the images in the survey were either generated by AI and then curated by humans, or they were generated by humans and then curated by humans.

Unless they explained that to the participants, it defeats the point of the question.

When you ask if it’s “artist or AI”, you’re implying there was no artist input in the latter.

The question should have been “Did the artist use generative AI tools in this work or did they not”?

Every “AI generated” image you see online is curated like that. Yet none of them are called “artist using generative AI tools”.

But they were generated by AI. It’s a fair definition

I mean fair, I just think that kind of thing stretches the definition of “fooling people”

LLMs are never divorced from human interaction or curation. They are trained by people from the start, so personal curation seems like a weird caveat to get hung up on with this study. The AI is simply a tool that is being used by people to fool people.

To take it to another level on the artistic spectrum, you could get a talented artist to make pencil drawings to mimic oil paintings, then mix them in with actual oil paintings. Now ask a bunch of people which ones are the real oil paintings and record the results. The human interaction is what made the pencil look like an oil painting, but that doesn’t change the fact that the pencil generated drawings could fool people into thinking they were an oil painting.

AIs like the ones used in this study are artistic tools that require very little actual artistic talent to utilize, but just like any other artistic tool, they fundamentally need human interaction to operate.

But not all AI generated images can fool people the way this post suggests. In essence this study then has a huge selection bias, which just makes it unfit for drawing any kind of conclusion.

This is true. This is not a study, as I see it, it is just for fun.

Technically you’re right but the thing about AI image generators is that they make it really easy to mass-produce results. Each one I used in the survey took me only a few minutes, if that. Some images like the cat ones came out great in the first try. If someone wants to curate AI images, it takes little effort.

I think getting a good image from the AI generators is akin to people putting in effort and refining their art rather than putting a bunch of shapes on the page and calling it done

Did you not check for a correlation between profession and accuracy of guesses?

I have. Disappointingly there isn’t much difference, the people working in CS have a 9.59 avg while the people that aren’t have a 9.61 avg.

There is a difference in people that have used AI gen before. People that have got a 9.70 avg, while people that haven’t have a 9.39 avg score. I’ll update the post to add this.

deleted by creator

| | mean | SD |
|---|---|---|
| No | 9.40 | 2.27 |
| Yes | 9.74 | 2.30 |

Definitely not statistically significant.

I would say so, but the sample size isn’t big enough to be sure of it.

So no. For a result to be “statistically significant” the calculated probability that it is the result of noise/randomness has to be below a given threshold. Few if any things will ever be “100% sure.”
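For anyone who wants to check this against the open dataset, here is a minimal sketch of a two-sample test computed from summary statistics. The means and SDs are the ones quoted in this thread; the group sizes are not given anywhere here, so `hypothetical_n` is a placeholder and `welch_z_test` is just an illustrative helper name. It uses a normal approximation rather than an exact t distribution.

```python
from math import erfc, sqrt


def welch_z_test(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Return (z, two_sided_p) for the difference between two group means.

    Uses unequal-variance standard errors and a normal approximation,
    which is reasonable once each group has a few dozen respondents.
    """
    standard_error = sqrt(sd_a**2 / n_a + sd_b**2 / n_b)
    z = (mean_a - mean_b) / standard_error
    p_value = erfc(abs(z) / sqrt(2))  # two-sided tail of the standard normal
    return z, p_value


# Means and SDs come from the thread; the group sizes are placeholders.
for hypothetical_n in (50, 1000):
    z, p = welch_z_test(9.74, 2.30, hypothetical_n, 9.40, 2.27, hypothetical_n)
    print(f"n = {hypothetical_n} per group: z = {z:.2f}, p = {p:.4f}")
```

With these placeholder sizes, the same 0.34-point gap is far from significant at 50 per group and falls below the usual 0.05 threshold at 1,000 per group, so the verdict depends on the real group sizes in the dataset.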

Can we get the raw data set? / could you make it open? I have academic use for it.

Sure, but keep in mind this is a casual survey. Don’t take the results too seriously. Have fun: https://docs.google.com/spreadsheets/d/1MkuZG2MiGj-77PGkuCAM3Btb1_Lb4TFEx8tTZKiOoYI

Do give some credit if you can.

Of course! I’m going to find a way to integrate this dataset into a class I teach.

If I can be a bother, would you mind adding a tab that details which images were AI and which were not? It would make it more usable, people could recreate the values you have on Sheet1 J1;K20

Done, column B in the second sheet contains the answers (Yes are AI generated, No aren’t)
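In case it helps anyone recreating the numbers, here is a rough sketch of scoring responses against that answer key. I’m assuming each response is a list of "Yes"/"No" guesses in image order, which may not match the actual sheet layout; the function names and the tiny example data are made up for illustration.

```python
def score_response(guesses, answer_key):
    """Count the guesses that match the key, position by position."""
    if len(guesses) != len(answer_key):
        raise ValueError("each response needs one guess per image")
    return sum(guess == truth for guess, truth in zip(guesses, answer_key))


def per_image_accuracy(all_guesses, answer_key):
    """Fraction of respondents who classified each image correctly."""
    return [
        sum(guesses[i] == truth for guesses in all_guesses) / len(all_guesses)
        for i, truth in enumerate(answer_key)
    ]


# Made-up example with 4 images and 3 respondents (not real survey data).
answer_key = ["Yes", "No", "Yes", "No"]  # "Yes" means AI generated
responses = [
    ["Yes", "No", "No", "No"],
    ["No", "No", "Yes", "Yes"],
    ["Yes", "Yes", "Yes", "No"],
]
scores = [score_response(r, answer_key) for r in responses]
accuracy = per_image_accuracy(responses, answer_key)
print(scores, accuracy)
```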

Awesome! Thanks very much.

I’d be curious to see the results broken down by image generator. For instance, how many of the Midjourney images were correctly flagged as AI generated? How does that compare to DALL-E? Are there any statistically significant differences between the different generators?

Are there any statistically significant differences between the different generators?

Every image was created by DALL-E 3 except for one. I honestly got lazy so there isn’t much data there. I would say DALL-E is much better in creating stylistic art but Midjourney is better at realism.

Something I’d be interested in is restricting the “Are you in computer science?” question to AI related fields, rather than the whole of CS, which is about as broad a field as social science. Neural networks are a tiny sliver of a tiny sliver

Especially depending on the nation or district a person lives in, where CS can have even broader implications like everything from IT Support to Engineering.

And this is why AI detector software is probably impossible.

Just about everything we make computers do is something we’re also capable of; slower, yes, and probably less accurately or with some other downside, but we can do it. We at least know how. We can’t program software or train neural networks to do something that we have no idea how to do.

If this problem is ever solved, it’s probably going to require a whole new form of software engineering.

And this is why AI detector software is probably impossible.

What exactly is “this”?

Just about everything we make computers do is something we’re also capable of; slower, yes, and probably less accurately or with some other downside, but we can do it. We at least know how.

There are things computers can do better than humans, like memorizing, or precision (also both combined). For all the rest, while I agree in theory we could be on par, in practice it matters a lot that things happen in reality. There often is only a finite window to analyze and react and if you’re slower, it’s as good as if you knew nothing. Being good / being able to do something often means doing it in time.

We can’t program software or train neural networks to do something that we have no idea how to do.

Machine learning does that. We don’t know how all these layers and neurons work, we could not build the network from scratch. We cannot engineer/build/create the correct weights, but we can approach them in training.

Also look at Generative Adversarial Networks (GANs). The adversarial part is literally to train a network to detect bad AI generated output, and tweak the generative part based on that error to produce better output, rinse and repeat. Note this by definition includes a (specific) AI detector software, it requires it to work.

What exactly is “this”?

The results of this survey showing that humans are no better than a coin flip.

while I agree in theory we could be on par, in practice it matters a lot that things happen in reality.

I didn’t say “on par.” I said we know how. I didn’t say we were capable, but we know how it would be done. With AI detection, we have no idea how it would be done.

Machine learning does that.

No it doesn’t. It speedruns the tedious parts of writing algorithms, but we still need to be able to compose the problem and tell the network what an acceptable solution would be.

Also look at Generative Adversarial Networks (GANs). […] this by definition includes a (specific) AI detector software, it requires it to work.

Several startups, existing tech giants, AI companies, and university research departments have tried. There are literally millions on the line. All they’ve managed to do is get students incorrectly suspended from school, misidentify the US Constitution as AI output, and get a network really good at identifying training data and absolutely useless at identifying real world data.

Note that I said that this is probably impossible, only because we’ve never done it before and the experiments undertaken so far by some of the most brilliant people in the world have yielded useless results. I could be wrong. But the evidence so far seems to indicate otherwise.

Right, thanks for the corrections.

In case of GAN, it’s stupidly simple why AI detection does not take off. It can only be half a cycle ahead (or behind), at any time.

Better AI detectors train better AI generators. So while technically for a brief moment in time the advantage exists, the gap is immediately closed again by the other side; they train in tandem.

This does not tell us anything about non-GAN though, I think. And most AI is not GAN, right?

True, at least currently. Image generators are mostly diffusion models, and LLMs are largely GPTs.

I don’t know… My computer can do crazy math like 13+64 and other impossible calculations like that.

Wow, I got a 12/20. I thought I would get less. I’m scared for the future of artists

Why? A lot of artists have adopted AI and use it as just another tool.

I’m sure artists can use it as another tool, but the problem comes when companies think they can get away with just using ai. Also, the ai has been trained using artwork without any artist permission

The training data containing non licensed artwork is an extremely short term problem.

Within even a few years that problem will literally be moot.

Huge data sets are being made right now explicitly to get around this problem. And ai trained on other AI to the point that original sources no longer are impactful enough to matter.

At a point the training data becomes so generic and intermixed that it’s indistinguishable from humans trained on other humans. At which point you no longer have any legal issues since if you deem it still unallowed at that point you have to ban art schools and art teachers functionally. Since ai learns the same way we do.

The true problem is just that the training data is too narrow and very clearly copies large chunks from existing artists instead of copying techniques and styles like a human does. Which also is solvable. :/

Which is an issue if those artists want to copyright their work. So far the US has maintained that AI generated art is not subject to copyright protection.

Only if it’s 100% synth art. What about partial? We don’t know.

As with other AI-enhanced jobs, that probably still means less jobs in the long run.

Now one artist can make more art in the same time, or produce different styles which previously had required different artists.

Wow, what a result. Slight right skew but almost normally distributed around the exact expected value for pure guessing.

Assuming there were 10 examples in each class anyway.
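To put numbers on “pure guessing”, here is a small sketch that models each answer as a fair coin flip over 20 questions (an assumption, not something the survey guarantees) and computes the mean and standard deviation of the resulting score. `guessing_distribution` is just an illustrative name.

```python
from math import comb, sqrt


def guessing_distribution(n_questions=20, p_correct=0.5):
    """Probability of each possible score if every answer is a random guess."""
    return [
        comb(n_questions, k) * p_correct**k * (1 - p_correct) ** (n_questions - k)
        for k in range(n_questions + 1)
    ]


probabilities = guessing_distribution()
mean = sum(k * pk for k, pk in enumerate(probabilities))
sd = sqrt(sum((k - mean) ** 2 * pk for k, pk in enumerate(probabilities)))
print(f"mean = {mean:.2f}, sd = {sd:.2f}")
```

Under that model the expected score is 10 with a standard deviation of about 2.24, which can be compared with the averages and SDs people have posted from the dataset.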

It would be really cool to follow up by giving some sort of training on how to tell, if indeed such training exists, then retest to see if people get better.

I feel like the images selected were pretty vague. Like if you have a picture of a stick man and ask if a human or computer drew it. Some styles are just hard to tell.

You could count the fingers but then again my preschooler would have drawn anywhere from 4 to 40.

I don’t remember any of the images having fingers to be honest. Assuming this is that recent one, one sketch had the fingers obscured and a few were landscapes.

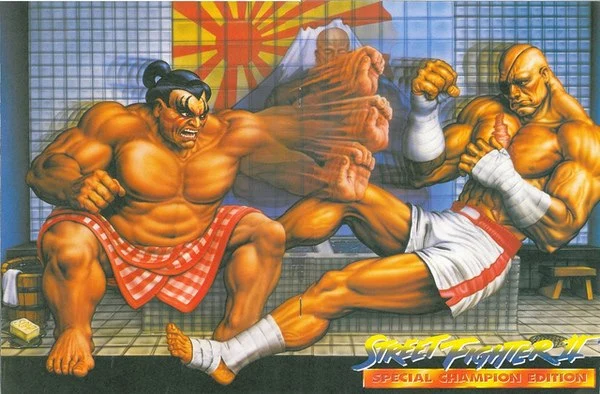

Imo, 3, 17, and 18 were obviously AI (based on what I’ve seen from AI art generators in the past*). But whatever original art those are based on, I’d probably also flag as obviously AI. The rest I was basically guessing at random. Especially the sketches.

*I never used AI generators myself, but I’ve seen others do it on stream. Curious how many others like me are raising the average for the “people that haven’t used AI image generators before” group.

I’d be curious about 18, what makes it obviously generated for you? Out of the ones not shown in the result, I got most right but not this one.

Either the lighting’s wrong or you somehow have a zebra cat

The arm rest seamlessly and senselessly blends into the rest of the couch.

Thank you. I’m not sure how I’ve missed that.

Seen a lot of very similar pictures generated via midjourney. Mostly goats fused with the couch.

I was legitimately surprised by the man on a bench being human-made. His ankle is so thin! The woman in a bar/restaurant also surprised me because of her tiny finger.

I got a 17/20, which is awesome!

I’m angry because I could’ve gotten an 18/20 if I’d paid attention to the glasses on the thispersondoesnotexist image, which in hindsight are clearly all messed up.

I did guess that one human-created image was made by AI, “The End of the Journey”. I guessed that way because the horses had unspecific legs and no tails. And also, the back door of the cart they were pulling also looked funky. The sky looked weirdly detailed near the top of the image, and suddenly less detailed near the middle. And it had birds at the very corner of the image, which was weird. I did notice the cart has a step-up stool thing attached to the door, which is something an AI likely wouldn’t include. But I was unsure of that. In the end, I chose wrong.

It seems the best strategy really is to look at the image and ask two questions:

- what intricate details of this image are weird or strange?

- does this image have ideas that indicate thought was put into them?

About the second bullet point, it was immediately clear to me the strawberry cat thing was human-made, because the waffle cone it was sitting in was shaped like a fish. That’s not really something an AI would understand is clever.

On the tomato and avocado one, the avocado was missing an eyebrow. And one of the leaves of the stem of the tomato didn’t connect correctly to the rest. Plus their shadows were identical and did not match the shadows they would’ve made had a human drawn them. If a human did the shadows, it would either be 2 perfect simplified circles, or include the avocado’s arm. The AI included the feet but not the arm. It was odd.

The anime sword guy’s armor suddenly diverged in style when compared to the left and right of the sword. It’s especially apparent in his skirt and the shoulder pads.

The sketch of the girl sitting on the bench also had a mistake: one of the back legs of the bench didn’t make sense. Her shoes were also very indistinct.

I’ve not had a lot of practice staring at AI images, so this result is cool!

does this image have ideas that indicate thought was put into them?

I got fooled by the bright mountain one. I assumed it was just generic art vomit a la Kinkade

About the second bullet point, it was immediately clear to me the strawberry cat thing was human-made, because the waffle cone it was sitting in was shaped like a fish. That’s not really something an AI would understand is clever.

It’s a Taiyaki cone, something that already exists. Wouldn’t be too hard to get AI to replicate it, probably.

I personally thought the stuff hanging on the side was oddly placed and got fooled by it.

Yes, “The End of the Journey” got me too. So AI-looking. It’s this one:

https://www.deviantart.com/davidevart/art/The-End-Of-The-Journey-952592148

Having used stable diffusion quite a bit, I suspect the data set here is using only the most difficult to distinguish photos. Most results are nowhere near as convincing as these. Notice the lack of hands. Still, this establishes that AI is capable of creating art that most people can’t tell apart from human made art, albeit with some trial and error and a lot of duds.

These images were fun, but we can’t draw any conclusions from it. They were clearly chosen to be hard to distinguish. It’s like picking 20 images of androgynous looking people and then asking everyone to identify them as women or men. The fact that success rate will be near 50% says nothing about the general skill of identifying gender.

I have it on very good authority from some very confident people that all ai art is garbage and easy to identify. So this is an excellent dataset to validate my priors.

Curious which man made image was most likely to be classified as ai generated

and

About 20% got those correct as human-made.

That’s the same image twice?

Thanks. Fixed.

My first impression was “AI” when I saw them, but I figured an AI would have put buildings on the road in the town, and the 2nd one was weird but the parts fit together well enough.

I think it’s because of the shadow on the ground being impossible

I guessed it to be ai generated, because

- the horses are very artsy, but undefined

- the sky is easy to generate for ai

- the landscape as well. There is simply not a lot the ai can do wrong to make a landscape clearly fake looking

- undefined city from afar is like the one thing ai is seriously good at.

Yeah, but the bridge is correctly over the river and the buildings aren’t really merged. Tough though.

The second one got me tho

Sketches are especially hard to tell apart because even humans put in extra lines and add embellishments here and there. I’m not surprised more than 70% of participants weren’t able to tell that one was generated.

Thank you so much for sharing the results. Very interesting to see the outcome after participating in the survey.

Out of interest: do you know how many participants came from Lemmy compared to other platforms?

No idea, but I would assume most results are from here since Lemmy is where I got the most attention and feedback.

I found the survey through Tumblr; I expect there’s quite a few from there.

Oh nice, do you have a link for where it was posted?

i’ll see if i can find it, but my dashboard hits 99+ notification in about six hours…

I guess I should feel good with my 12/20 since it’s better than average.

12/20 is not a good result. There’s a 25% chance of getting the same score (or better) by just guessing. The comments section is a good place for all the lucky guessers (one out of 4 test takers) to congregate.
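The 25% figure can be checked with a short sketch, assuming 20 independent yes/no questions and a fair coin flip on each one. `prob_at_least` is a made-up helper name.

```python
from math import comb


def prob_at_least(score, n_questions=20, p_correct=0.5):
    """Chance of scoring `score` or higher when every answer is a random guess."""
    return sum(
        comb(n_questions, k) * p_correct**k * (1 - p_correct) ** (n_questions - k)
        for k in range(score, n_questions + 1)
    )


for score in (12, 17):
    print(f"P(score >= {score}) = {prob_at_least(score):.4f}")
```

Under this model a 12/20 or better comes up about 25% of the time, while a 17/20 or better comes up only about 0.13% of the time.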

It’d be interesting to also see the two non-AI images that the most people thought were AI.

I got 11/20 and there were a couple of guesses in there that I got right and wrong. Funny how there are some man-made ones that seem like AI. I think it’s the blurry/fuzziness maybe?