Yikes. There’s not a lot of imagination in combining two animals and making most of them blue. I’d expect better…even from AI.

“It felt like a singular embarrassment”

“AI-fueled debasement”

We really need more of this type of scathing journalism and less of the phony type which is so widespread nowadays: hoping to curry favor and to gain inside scoops by sugar-coating even the worst of big corporations’ brainfarts.

Ya man. If it wasn’t a room full of sycophants, there would have been chuckles and groans. Like…you can literally see how lazy the AI prompts were: “combine two animals and make most of them blue”…also…I like Lemur faces…use those a bunch.

When you really think about it, one of the fundamental flaws with AI is that it literally cannot create anything new because it requires training data that already exists. The best it can realistically do is mix and match traits of other animals until it creates something “new.” If Star Wars was made today and entirely with AI it would not have the necessary training data to make anything even remotely close to what we got back in the 70s and would look like… This.

And this is a huge problem with pretty much all “AI” today. We say that they can “learn” and be “trained” but it’s basically just a large and incoherent database that recycles the data in a way that makes sense to humans. It’s like if you were tasked with creating concept art for a movie or a game but your boss said you can’t start with a blank image and can only modify existing concept art. We already see copy/paste movies, games, and books all the time and we already don’t like it.

The concerning thing is that the tech bro executives in charge of the entertainment industry are full of this kind of nihilism where they truly believe nothing new needs to be made. They are these brain-rot Gen Xers who think that the ’80s were the peak of creative achievement and that we can just keep remixing 6 tentpole franchises and 2 variations of the hero’s journey forever.

The people in charge have no curiosity or imagination and their technologies are a reflection of their ideology.

“Two variations of the hero’s journey?”

What’s the second one?

Also, Dan Harmon would like a word about story circles.

I mean, say what you will of the guy, but he’s not even close to being the most derivative writer I know. Hell, I’d even argue he has had a few original thoughts at one point or another.

But yeah, you hit the nail on the head with the comment. However the whole “nothing new under the sun” thing is literally thousands of years old, so it’s not like people haven’t been complaining about similar things for ages.

My favorite example of this is watching people try and generate AI images of Sharpedo, only to have them all have tails because the AI can’t comprehend a shark without a tail.

Can’t make a wine glass filled to the brim either because there are no pics of full wine glasses.

All creative works are built on top of works that came before them. AI is no different.

If you tell AI to generate an image of a fat, blue, lizardperson with a huge dick, wearing a fedora, holding way too many groceries… It’ll do that. And you just used it to make something original that (probably) never existed before.

It will have literally created something new. Thanks to the instructions of a creative human.

Saying AI is incapable of creating something new is like saying that programmers can’t create anything new because they’re just writing instructions for a computer to follow. AI is just the latest technology for doing that.

This is the creative nihilism I am talking about: no curiosity, no credit to creatives, anti-human automaton ghouls. If these are our so-called trailblazers, no wonder the world is going to shit.

Liche fother, liche sun

This line of thinking is a real misunderstanding of creativity. “Humans are only remixing things they’ve learned, so AI is the same”. It’s not the same. If an AI has nothing but images of apples in its training data, it will never ever be able to draw a banana. It seems creative and smart on the surface only because it’s trained on the (stolen) input of every bit of art, text, and code on the internet. But if humans stop creating new imaginative input, it will stagnate right where it is.

If someone has never seen a banana they wouldn’t be able to draw it either.

Also, AIs aren’t stealing anything. When you steal something you have deprived the original owner of that thing. If anything, AIs are copying things but even that isn’t accurate.

When an image AI is trained, it reads through millions upon millions of images that live on the public Internet and for any given image it will increment a floating point value by like 0.01. That’s it. That’s all they do.

For some reason people have this idea in their heads that every AI-generated image can be traced back to some specific image that it somehow copied exactly then modified slightly and combined together for a final output. That’s not how the tech works at all.

You can steal a car. You can’t steal an image.

“If someone hasn’t seen a banana they wouldn’t be able to draw it either.”

Hard disagree. If you gave said person a prompt about what a banana looks like they could draw one.

No matter how much prompting you do, an ai that hasn’t seen a banana cannot make one.

Hard disagree. You just have to describe the shape and colors of the banana and maybe give it some dimensions. Here’s an example:

A hyper-realistic studio photograph of a single, elongated organic object resting on a wooden surface. The object is curved into a gentle crescent arc and features a smooth, waxy, vibrant yellow skin. It has distinct longitudinal ridges running its length, giving it a soft-edged pentagonal cross-section. The bottom end tapers to a small, dark, organic nub, while the top end extends into a thick, fibrous, greenish-brown stalk that appears to have been cut from a larger cluster. The yellow surface has minute brown speckles indicating ripeness.

It’s a lot of description but you’ve got 4096 tokens to play with so why not?

Remember: AI is just a method for giving instructions to a computer. If you give it enough details, it can do the thing at least some of the time (also remember that at the heart of every gen AI model is an RNG).

Note: That was the first try and I didn’t even use the word “banana”.

That’s also not a banana. It’s some type of gourd.

It’s close enough. The key is that it’s not something that was just regurgitated based on a single keyword. It’s unique.

I could’ve generated hundreds and I bet a few would look a lot more like a banana.

Totally disagree. I’ve seen original sources reproduced that show exactly what an AI copied to make images.

And humans can definitely create things that have never been seen before. An AI could never have invented general relativity having only been trained on Newtonian physics. But Einstein did.

“I’ve seen original sources reproduced that show exactly what an AI copied to make images.”

Show me. I’d honestly like to see it because it means that something very, very strange is taking place within the model that could be a vulnerability (I work in security).

The closest thing to that I’ve seen is false watermarks: If the model was trained on a lot of similar images with watermarks (e.g. all images of a particular kind of fungus might have come from a handful of images that were all watermarked), the output will often have a nonsense watermark that sort of resembles the original one. This usually only happens with super specific things like when you put the Latin name of a plant or tree in your prompt.

Another thing that can commonly happen is hallucinated signatures: On any given image that’s supposed to look like a painting/drawing, image models will sometimes put a signature-looking thing in the lower right corner (because that’s where most artist signatures are placed).

The reason why this happens isn’t because the image was directly copied from someone’s work, it’s because there’s a statistical chance that the model (when trained) associated the keywords in your prompt with some images that had such signatures. The training of models is getting better at preventing this from happening though, as they apply better bounding box filtering to the images as a pretraining step. E.g. a public domain Audubon drawing of a pelican would only use the bird itself and not the entire image (which would include the artist signature somewhere).

The reason why the signature should not be included is because the resulting image would not be drawn by that artist. That would be tantamount to fraud (bad). Instead, what image models do (except OpenAI with ChatGPT/DALL-E) is tell the public exactly what their images were trained on. For example, they’ll usually disclose that they used ImageNet (which you yourself can download here: https://www.image-net.org/download.php ).

Note: I’m pretty sure the full ImageNet database is also on Hugging Face somewhere if you don’t want to create an account with them.

Also note: ImageNet doesn’t actually contain images! It’s just a database of image metadata that includes bounding boxes. For over a decade, volunteers spent a lot of time drawing bounding boxes with labels/descriptions on public images that are available for anyone to download for free (with open licenses!). This means that if you want to train a model with ImageNet, you have to walk the database and download all the image URLs it contains.

If anything was “stolen”, it was the time of those volunteers that created the classification system/DB in order for things like OpenCV to work so that your doorbell/security camera can tell the difference between a human and a cat.

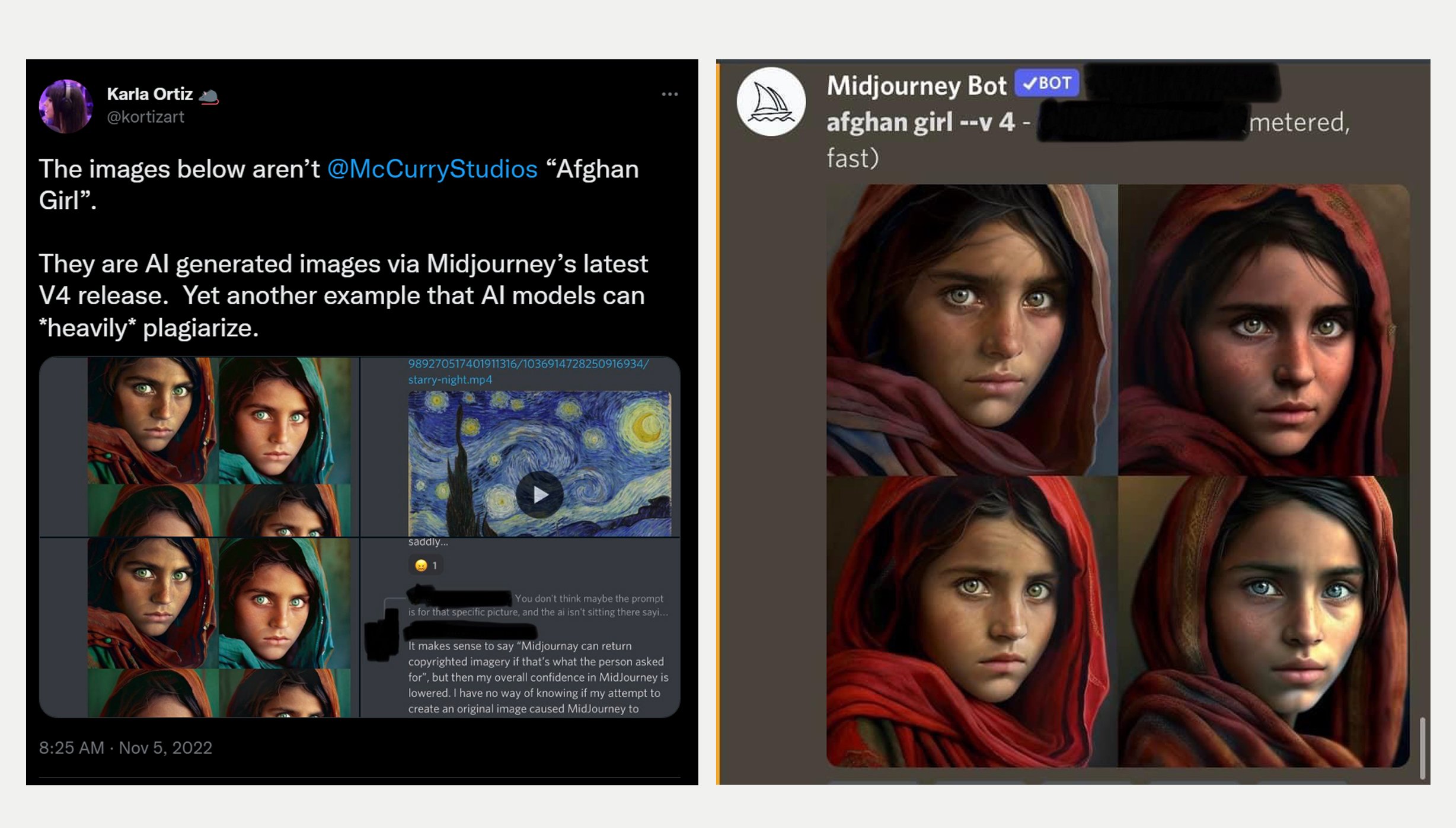

Examples like this (first one I found just now):

More reading

https://www.kortizblog.com/blog/why-ai-models-are-not-inspired-like-humans

What that Afghanistan girl image demonstrates is simply a lack of diversity in Midjourney’s training data. They probably only had a single image categorized as “Afghanistan girl”. So the prompt ended up with an extreme bias towards that particular set of training values.

Having said that, Midjourney’s model is entirely proprietary so I don’t know if it works the same way as other image models.

It’s all about statistics. For example, there were so many quotes and literal copies of the first Harry Potter book in OpenAI’s training set that you could get ChatGPT to spit out something like 70% of the book with a lot of very, very specific prompts.

At the heart of every AI is a random number generator. If you ask it to generate an image of an Afghan girl—and it was only ever trained on a single image—it’s going to output something similar to that one image every single time.

On the other hand, if it had thousands of images of Afghan girls you’d get more varied and original results.
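To make the statistics point concrete, here’s a toy sketch. This is nowhere near how a real image model works: the “model” below just remembers the average and spread of some numbers, and the names `fit` and `generate` are made up for illustration. It only shows why one training example plus an RNG gives you the same output every time, while many examples give you variety.

```python
import random
import statistics

def fit(samples):
    """'Train' a toy model: remember only the mean and spread of the data."""
    mean = statistics.fmean(samples)
    # With a single sample the population standard deviation is 0.0,
    # so the model has no variation to draw on.
    spread = statistics.pstdev(samples)
    return mean, spread

def generate(model, rng, n=5):
    """Sample n outputs from the toy model using a random number generator."""
    mean, spread = model
    return [round(rng.gauss(mean, spread), 2) for _ in range(n)]

rng = random.Random(0)  # fixed seed so the run is repeatable

one_image = fit([7.0])                           # only one training example
many_images = fit([1.0, 4.0, 7.0, 9.0, 12.0])    # several different examples

single_outputs = generate(one_image, rng)
varied_outputs = generate(many_images, rng)

print(single_outputs)  # every value is the single training example
print(varied_outputs)  # values scattered around the training data
```

The RNG is still being called in both cases. With one example there is just nothing for the randomness to vary.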

For reference, finding flaws in training data like that “Afghanistan girl” is one of the methods security researchers use to break large language models.

Flaws like this are easy to fix once they’re found. So it’s likely that over time, image models will improve and we’ll see fewer issues like this.

The “creativity” isn’t in the AI model itself, it’s in its use.

Not exactly. As long as the description is understood by AI, it can be generated and won’t be a mish-mash of other animals.

This just showcases the need for precise, well-understood prompt engineering, aka why you can’t just get rid of your designers and have overpaid managers prompt the AI because those chuckfuckles know fuck-all about the process, or creativity (same reason why software engineers won’t be replaced by managers either).

Now obviously, the term to describe what you want has to exist in common vernacular, and for visual AIs, has to have been referenced in the dataset, in some form or manner, for the AI to be able to output it. And ideally, actual designers and graphic artists in the props and costumes departments would be using AI for quick visual prototyping, to confirm details, etc., within minutes of the discussion happening (instead of spending hours or days on initial sketches that get declined, refined, and finalised), speeding up the process before the final version is then hand-made.

This would be the ideal workflow, but some companies have simply grown too big for their own good. At Disney, for example, the leadership is so detached from the actual workforce delivering their products that meddling middle managers who only care about immediate quarterly and annual costs and income can easily convince said leaders to “help” by getting rid of the expensive people, “replace” them with AI, and save tons of money while “delivering the same quality”. Except they’re now realising that it’s nowhere near the same quality, and their customers will actively reject the companies for doing this. But the middle managers don’t care: they delivered “growth” by saving a fuckton of money by firing people and not having to pay their salaries, got their big fat bonuses for these actions, and have already fucked off to the next company to ruin…

LLMs aren’t able to understand things.

You’re exaggerating. Many things people come up with for movies are based on or similar to things from older shows. You don’t have to make every single creature 100% unique and original. It would actually be strange if people weren’t able to recognize anything on the screen. Look at Guardians of the Galaxy: you have a human, a green humanoid, a red humanoid, a tree-like humanoid and a badger-like humanoid. Not really levels of creativity beyond AI… Not to mention that the most popular movies today are all remakes and sequels.

The example from Disney was lazy and stupid, AI sux in general but “not being able to create anything new” is not really the main problem here.

I’ve seen this argument, and it’s inherently flawed and reductionist, and it’s from people who don’t understand how AI image generation and human minds work.

AI turns images into static, then turns that into math, then takes the prompt and changes the math, then turns the new math back into static and the static into a picture. Basically.
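For anyone curious what “turns images into static” looks like, here’s a rough sketch of just the noising half, assuming a diffusion-style model. The function name `add_noise`, the `keep` parameter and the four-pixel “image” are made up for illustration. The part where a trained network learns to undo the noise, guided by the prompt, is not shown.

```python
import math
import random

def add_noise(pixels, keep, rng):
    """Blend an image with Gaussian static.

    keep=1.0 returns the image unchanged; keep=0.0 returns pure static.
    """
    signal = math.sqrt(keep)
    noise = math.sqrt(1.0 - keep)
    return [signal * p + noise * rng.gauss(0.0, 1.0) for p in pixels]

rng = random.Random(42)          # fixed seed so the run is repeatable
image = [0.9, 0.1, 0.8, 0.2]     # a tiny stand-in for pixel values

untouched = add_noise(image, 1.0, rng)   # no static mixed in
half_way = add_noise(image, 0.5, rng)    # image and static blended
static = add_noise(image, 0.0, rng)      # nothing of the image left

print(untouched)
print(half_way)
print(static)
```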

Human brains not only choose what things to use inspiration from, but they also change those things, by choice, and since we don’t have perfect recall and everything is stored as what the brain thinks it ought to be, we misremember things, which then are absolutely new things.

Just because you built off of something else doesn’t mean it’s not new, and extrapolating the argument that nothing is new means you’d have to show how cavemen had EDM and cell phones.

AI is just a trick, nothing more.

People here like to ignore the article and just start rambling about random things. If we’re talking about AI coming up with completely novel concepts, being creative in some philosophical sense or being able to create beautiful art then of course you’re right. AI does not operate like people and is not able to replicate the way human creativity works.

But this article is about Disney using AI to generate SciFi animals to use in a background of a movie. You don’t need to be a creative genius and push the boundaries of art to do this. SciFi movies done by people don’t do this. They usually use caricatures of animals/people with recognizable but exaggerated features mixed in random ways. AI is perfectly capable of doing this. The video they used was terrible but it doesn’t mean AI couldn’t create a better example.

Basically, the article is not about achieving human-level creativity in cinema, so saying that AI can’t do it is beside the point.

Don’t change the subject. I’m not talking about the article, I’m criticizing your logic and take.

I was always talking about the article. Because it’s a post about an article. If someone is talking about something else then they changed the subject. If you want to talk about something else feel free to make a post about a different article. I will read it and tell you what I think.

Naw dude, I shouldn’t have to make a whole post special just for you. You had a shit argument about AI and I said it was shit, and now you’re deflecting instead of responding. I’ll chalk that up as you knowing your take is shit and are too vain or cowardly to admit you were wrong.

ok

“You’re exaggerating.”

You’re misunderstanding. OP is not saying humans don’t interpolate. They are saying AI can’t extrapolate.

And I’m saying that in the context of creativity and using AI for movies it’s not that important. The short movie from the article is not bad because AI can’t do any better. It’s bad because whoever used the AI tool did a bad job.

It can be both

I think your point is valid, but unrelated to previous responses.

That AI video was so embarrassing. No artist was involved in the creation of that. It was extremely amateur work.

Maybe you just lack the sophistication to truly enjoy an orange sloth, various animals but with stripes now, or the blue lion. Ever think about that?

It’s true, I absolutely do lack that ability. I also lack the ability to imagine alien animals that look almost exactly like earth animals. I’m so sorry.

No artist was involved because it was AI. AI is not art.

I know. But an artist can look at an AI video and say “this looks like shit”. So they absolutely didn’t “give tools to artists” as mentioned in the video. They got some tired middle manager or intern to generate a bunch of clips and called it a day.

Wow, it really was bad.

So, their “alien planet” animals are less creative than what we saw in The Last Airbender, 20 years ago? Is that the flex they’re making here?

it’s Disney so who’s surprised here