- cross-posted to:

- technology@lemmy.world

TIL Tim Sweeny is into child porn. Not surprising tbh.

The fall of Rome… The fall of the perverse…

If you can be effectively censored by the banning of a site flooded with CSAM, that’s very much your problem and nobody else’s.

Nothing made-up is CSAM. That is the entire point of the term “CSAM.”

It’s like calling a horror movie murder.

It’s too hard to tell real CSAM from AI-generated CSAM. Safest to treat it all as CSAM.

I get this and I don’t disagree, but I also hate that AI fully brought back thought crimes as a thing.

I don’t have a better approach or idea, but I really don’t like that simply drawing a certain arrangement of lines and colors is now a crime. I’ve also seen a lot of positive sentiment at applying this to other forms of porn as well, ones less universally hated.

Not supporting this use case at all and on balance I think this is the best option we have, but I do think thought crimes as a concept are just as concerning, especially given the current political climate.

Sure, I think it’s weird to really care about loli or furry or any other niche the way a lot of people do around here, but AI generating material of actual children (and unwilling people besides) is actually harmful. If they can’t have effective safeguards against that harm, it makes sense to restrict it legally.

Making porn of actual people without their consent regardless of age is not a thought crime. For children, that’s obviously fucked up. For adults it’s directly impacting their reputation. It’s not a victimless crime.

But generating images of adults that don’t exist? Or even clearly drawn images that aren’t even realistic? I’ve seen a lot of people (from both sides of the political spectrum) advocate that these should be illegal if the content is what they consider icky.

Like let’s take bestiality for example. Obviously gross and definitely illegal in real life. But should a cartoon drawing of the act really be illegal? No one was abused. No reputation was damaged. No illegal act took place. It was simply someone’s fucked up fantasy. Yet lots of people want to make that into a thought crime.

I’ve always thought that if there isn’t speech out there that makes you feel icky or gross then you don’t really have free speech at all. The way you keep free speech as a right necessarily requires you to sometimes fight for the right of others to say or draw or write stuff that you vehemently disagree with, but recognize as not actually causing harm to a real person.

Drawings are one conversation I won’t get into.

GenAI is vastly different though. Those are known to sometimes regurgitate people or things from their dataset, (mostly) unaltered. Like how you can get Copilot to spit out valid secrets that people accidentally committed by typing `NPM_KEY=`. You can’t have any guarantee that if you ask it to generate a picture of a person, that person does not actually exist.

Totally fair stance to take. I’m 100% on board with extra restrictions and scrutiny over anything that is photo realistic.

To me, those aren’t necessarily victimless crimes, even if the person doesn’t actually exist, because they poison the well with realistic looking fakes. That is actively harmful to others, so is not a victimless crime. Instead it becomes just another form of misinformation.

Making porn of actual people without their consent regardless of age is not a thought crime. For children, that’s obviously fucked up. For adults it’s directly impacting their reputation. It’s not a victimless crime.

That is also drawing a certain arrangement of lines and colours, and an example of “free speech” that you don’t think should be absolute.

Yes sorry. My original statement was too vague. I was talking specifically about scenarios where there is no victim and the action was just a drawing/story/etc.

I’m not a free speech absolutist. I think that lacks nuance. There are valid reasons to restrict certain forms of speech. But I do think the concept is core to a healthy democracy and society and should be fiercely protected.

I really don’t like that simply drawing a certain arrangement of lines and colors is now a crime

I’m sorry to break it to you, but this has been illegal for a long time and it doesn’t need to have anything to do with CSAM.

For instance, drawing certain copyrighted material in certain contexts can be illegal.

To go even further, numbers and maths can be illegal in the right circumstances. For instance, it may be illegal where you live to break the encryption of a certain file, depending on the file and encryption in question (e.g. DRM on copyrighted material). “Breaking the encryption of a file” essentially translates to “doing maths on a number” when you boil it down. That’s how you can end up with the concept of illegal numbers.

To further clarify it’s specifically around thought crimes in scenarios where there is no victim being harmed.

If I’m distributing copyrighted content, that’s harming the copyright holder.

I don’t actually agree with breaking DRM being illegal either, but at least in that case, doing so is supposedly harming the copyright holder because presumably you might then distribute it, or you didn’t purchase a second copy in the format you wanted or whatever. There’s a ‘victim’ that’s being harmed.

Doodling a dirty picture of a totally original character doing something obscene harms absolutely no one. No one was abused. No reputation (other than my own) was harmed. If I share that picture with other consenting adults in a safe fashion, again no one was harmed or had anything done to them that they didn’t agree to.

It’s totally ridiculous to outlaw that. It’s punishing someone for having a fantasy or thought that you don’t agree with and ruining their life. And that’s an extremely easy path to expand into other thoughts you don’t like as well. And then we’re back to stuff like sodomy laws and the like.

It was already a thing in several places. In my country it’s legal to sleep with a 16 year old, but fiction about the same thing is illegal.

You can insist every frame of Bart Simpson’s dick in The Simpsons Movie should be as illegal as photographic evidence of child rape, but that does not make them the same thing. The entire point of the term CSAM is that it’s the actual real evidence of child rape. It is nonsensical to use the term for any other purpose.

The *entire point* of the term CSAM is that it’s the actual real evidence of child rape.

You are completely wrong.

https://rainn.org/get-the-facts-about-csam-child-sexual-abuse-material/what-is-csam/

“CSAM (“see-sam”) refers to any visual content—photos, videos, livestreams, or AI-generated images—that shows a child being sexually abused or exploited.”

“Any content that sexualizes or exploits a child for the viewer’s benefit” <- AI goes here.

RAINN has completely lost the plot by conflating the explicit term for Literal Photographic Evidence Of An Event Where A Child Was Raped with made-up bullshit.

We will inevitably develop some other term like LPEOAEWACWR, and confused idiots will inevitably misuse that to refer to drawings, and it will be the exact same shit I’m complaining about right now.

Dude, you’re the only one who uses that strict definition. Go nuts with your course of prescriptivism but I’m pretty sure it’s a lost cause.

Child pornography (CP), also known as child sexual abuse material (CSAM) and by more informal terms such as kiddie porn, is erotic material that involves or depicts persons under the designated age of majority.

[…]

Laws regarding child pornography generally include sexual images involving prepubescents, pubescent, or post-pubescent minors and computer-generated images that appear to involve them.

(Emphasis mine)

‘These several things are illegal, including the real thing and several made-up things.’

Please stop misusing the term that explicitly refers to the real thing.

‘No.’

deleted by creator

The Rape, Abuse, & Incest National Network (RAINN) defines child sexual abuse material (CSAM) as “evidence of child sexual abuse” that "includes both real and synthetic content

Were you too busy fapping to read the article?

Is it a sexualized depiction of a minor? Then it’s csam. Fuck all y’all pedo apologists.

AI CSAM was generated from real CSAM

AI being able to accurately undress kids is a real issue in multiple ways

AI can draw Shrek on the moon.

Do you think it needed real images of that?

It used real images of Shrek and the moon to do that. It didn’t “invent” or “imagine” either.

The child porn it’s generating is based on literal child porn, if not itself just actual child porn.

You think these billion-dollar companies keep hyper-illegal images around, just to train their hideously expensive models to do the things they do not want those models to do?

Like combining unrelated concepts isn’t the whole fucking point?

No, I think these billion dollar companies are incredibly sloppy about curating the content they steal to train their systems on.

True enough - but fortunately, there’s approximately zero such images readily-available on public websites, for obvious reasons. There certainly is not some well-labeled training set on par with all the images of Shrek.

Yes and they’ve been proven to do so. Meta (Facebook) recently made the news for pirating a bunch of ebooks to train its AI.

Anna’s Archive, a site associated with training AI, recently scraped some 99.9% of Spotify songs. They say at some point they will make torrents so the common people can download it, but for now they’re using it to teach AI to copy music. (Note: Spotify uses lower quality than other music currently available, so AA will offer nothing new if/when they ever do release these torrents.)

So, yes, that is exactly what they’re doing. They are training their models on all the data, not just all the legal data.

It’s big fucking news when those datasets contain, like, three JPEGs. Because even one such JPEG is an event where the FBI shows up and blasts the entire hard drive into shrapnel.

Y’all insisting there’s gotta be some clearly-labeled archive with a shitload of the most illegal images imaginable, in order for the robot that combines concepts to combine the concept of “child” and the concept of “naked,” are not taking yourselves seriously. You’re just shuffling cards to bolster a kneejerk feeling.

It literally can’t combine unrelated concepts though. Not too long ago there was the issue where one (Dall-E?) couldn’t make a picture of a full glass of wine because every glass of wine it had been trained on was half full, because that’s generally how we prefer to photograph wine. It has no concept of “full” the way actual intelligences do, so it couldn’t connect the dots. It had to be trained on actual full glasses of wine to gain the ability to produce them itself.

And you think it’s short on images of fully naked women?

Yet another CEO who’s super into child porn huh?

Maybe we are the only people that don’t f kids. Maybe this is “H” “E” Double Hockey Sticks.

I’ll keep in mind Tim thinks child porn is just politics.

It is when one side of the political palette is “against” it but keeps supporting people who think CSAM is a-okay, while the other side finds it abhorrent regardless who’s pushing it.

I mean the capitalists are the ones calling the shots, since the imperial core is no democracy. This is their battle; we are their dildos.

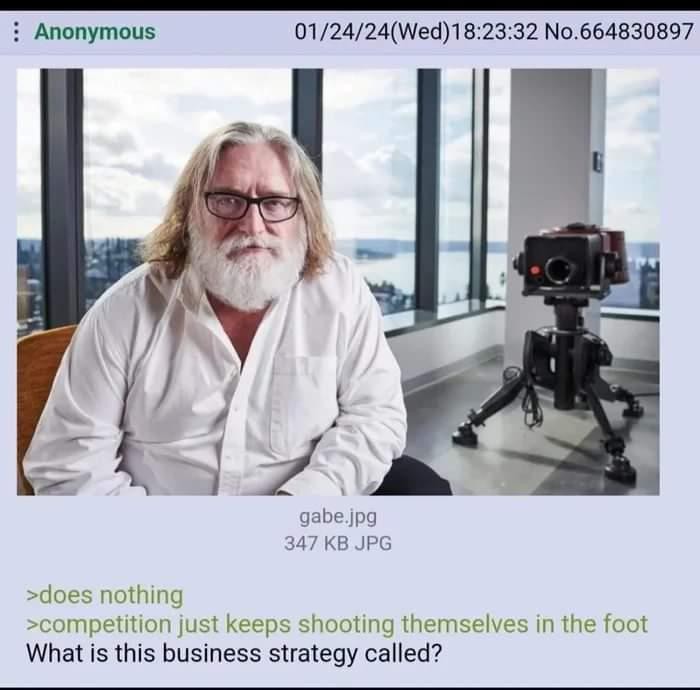

Literally this meme again

It helps that Tim Sweeney seems to always be wrong about everything.

If you wait by the river long enough, the bodies of your enemies will float by

I believe it’s called Let Them.

It’s called being so effective at marketing and spending so much money on it that people believe you do nothing.

Somebody is in a certain set of files

His opinion is as trash as his gaming storefront that insists it’s a platform.

Someone beat this man for attempting to defent AI csam

I wonder which AI companies he’s invested in

inb4 “In a stunning 5-4 decision, the Supreme Court has ruled that AI-generated CSAM is constitutionally protected speech”

There is no such thing as generated CSAM, because the term exists specifically to distinguish anything made-up from photographic evidence of child rape. This term was already developed to stop people from lumping together Simpsons rule 34 with the kind of images you report to the FBI. Please do not make us choose yet another label, which you would also dilute.

Generating images of a minor can certainly fulfill the definition of CSAM. It’s a child, It’s sexual, It’s abusive, It’s material. It’s CSAM dude.

These are the images you report to the FBI. Your narrow definition is not the definition. We don’t need to make a separate term because it still impacts the minor even if it’s fake. I say this as a somewhat annoying prescriptivist pedant.

There cannot be material from the sexual abuse of a child if that sexual abuse did not fucking happen. The term does not mean ‘shit what looks like it could be from the abuse of some child I guess.’ It means, state’s evidence of actual crimes.

It is sexual abuse even by your definition if photos of real children get sexualised by AI and land on xitter. And afaik that is what’s happened. These kids did not consent to have their likeness sexualised.

Nothing done to your likeness is a thing that happened to you.

Do you people not understand reality is different from fiction?

My likeness posted for the world to see in a way I did not consent to is a thing done to me.

Your likeness depicted on the moon does not mean you went to the moon.

Please send me pictures of your mom so that I may draw her naked and post it on the internet.

Do you understand that’s a different thing than telling me you’ve fucked her?

Deepfakes are illegal. You’re defending deepfake cp now?

Threats are a crime, but they’re a different crime than the act itself.

Everyone piling on understands that it’s kinda fuckin’ important to distinguish this crime, specifically, because it’s the worst thing imaginable. They just also want to use the same word for shit that did not happen. Both things can be super fucking illegal - but they will never be the same thing.

CSAM is abusive material of a sexual nature of a child. Generated or real, both fit this definition.

CSAM is material… from the sexual abuse… of a child.

Fiction does not count.

You’re the only one using that definition. There is no stipulation that it’s from something that happened.

Where is your definition coming from?

My definition is from what words mean.

We need a term to specifically refer to actual photographs of actual child abuse. What the fuck are we supposed to call that, such that schmucks won’t use the same label to refer to drawings?

How do you think a child would feel after having a pornographic image generated of them and then published on the internet?

Looks like sexual abuse to me.

Dude, just stop jerking off to kids whether they’re cartoons or not.

‘If you care about child abuse please stop conflating it with cartoons.’

‘Pedo.’

Fuck off.

Someone needs to check your harddrive mate. You’re way, way too invested in splitting this particular hair.

The Rape, Abuse, & Incest National Network (RAINN) defines child sexual abuse material (CSAM) as “evidence of child sexual abuse” that "includes both real and synthetic content

Did Covid-19 make everyone lose their minds? This isn’t about corporate folks being cruel or egotistical. This is just a stupid thing to say. Has the world lost the concept of PR??? Genuinely defending 𝕏 in the year 2026… for Deepfake porn including of minors??? From the Fortnite company guy???

Unironically this behaviour is just “pivoting to a run for office as a Republican” vibes nowadays.

It’s no longer even ‘weird behaviour’ for a US CEO.

For some reason Epic Games just lets Tim Sweeney say the most insane things. If I was a shareholder I’d want someone to take his phone off him.

They could learn a lesson from Tesla.

Trump has shown these oligarchs that they don’t have to pretend to not be arrogant oligarchs anymore. They can speak their minds without suffering any kind of repercussion or censure for their insane narcissistic greed.

Did Covid-19 make everyone lose their minds?

Every day further convinces me we all died of COVID, and this is The Bad Place.

Imagine where Epic would be if they had just censored Tim Sweeney’s Twitter account.

It’s like he’s hell bent on driving people away from Epic. I’m not sure I could be more abrasive if I tried, without losing the plausible deniability of not trying to troll.

Not just asshole. Nonce asshole.

steam

does nothing

wins

Tim Sweeney vocally supports child porn and deep fake porn? He certainly looks like the type of creeper, so I guess I’m not that surprised.

I wonder how many times he’s been to Trump and Epstein’s Pedophile Island 🤔

IMO commenters here discussing the definition of CSAM are missing the point. Definitions are working tools; it’s fine to change them as you need. The real thing to talk about is the presence or absence of a victim.

Non-consensual porn victimises the person being depicted, because it violates the person’s rights over their own body — including its image. Plus it’s ripe material for harassment.

This is still true if the porn in question is machine-generated, and the sexual acts being depicted did not happen. Like the sort of thing Grok is able to generate. This is what Timothy Sweeney (as usual, completely detached from reality) is missing.

And it applies to children and adults. The only difference is that adults can still consent to have their image shared as porn; children cannot. As such, porn depicting children will be always non-consensual, thus always victimising the children in question.

Now, someone else mentioned Bart’s dick appears in the Simpsons movie. The key difference is that Bart is not a child, it is not even a person to begin with, it is a fictional character. There’s no victim.

EDIT: I’m going to abridge what I said above, in a way that even my dog would understand:

What Grok is doing is harmful, there are victims of that, regardless of some “ackshyually this is not CSAM lol lmao”. And yet you guys keep babbling about definitions?

Everything else I said here was contextualising and detailing the above.

Is this clear now? Or will I get yet another lying piece of shit (like @Atomic@sh.itjust.works) going out of their way to misinterpret what I said?

(I don’t even have a dog.)

What exactly have I lied about?

I’ve never once tried to even insinuate that what Grok is doing is ok, nor that it should be. What I’ve said is that it doesn’t even matter if there is an actual real person being victimized or not. It’s still illegal. No matter how you look at it. It’s illegal. Fictional or not.

Your example of Bart in the Simpsons movie is so far out of place I hardly know where to begin.

It’s NOT because he’s fictional. Because fictional depictions of naked children in sexually compromised situations ARE illegal.

Though I am glad you don’t have a dog. It would be real awkward for the dog to always be the smartest being in the house.

Supporting CSAM should be treated like making CSAM.

Down into the forgetting hole with them!

Nobody here is supporting CSAM. Learn to read, dammit.

He implicitly is.

EDIT: Wait, what is this about? Did I misphrase something?

They mistook your comment as disagreeing with their take on how there are real victims of Grok’s porn and CSAM and saying that they themselves were supporting CSAM, rather than saying that you agree and were saying Sweeney is supporting CSAM.

Gasp “Lvxferre! You damn Diddy demon! How could youuuu!”

At this rate I’m calling dibs on your nickname 🤣

Fuck! I misread you. Yes, you’re right, Tim Sweeney is supporting CSAM.

Sorry for the misunderstanding, undeserved crankiness, and defensiveness; I thought you were claiming I was the one doing it. That was my bad. (In my own defence, someone already did it.)

Now, giving you a proper answer: yeah, Epic is better sent down the forgetting hole. And I hope Sweeney gets haunted by his own words for years and years to come.

That is a lot of text for someone that couldn’t even be bothered to read the first paragraph of the article.

Grok has the ability to take photos of real people, including minors, and produce images of them undressed or in otherwise sexually compromising positions, flooding the site with such content.

There ARE victims, lots of them.

That is a lot of text for someone that couldn’t even be bothered to read a comment properly.

Non-consensual porn victimises the person being depicted

This is still true if the porn in question is machine-generated

The real thing to talk about is the presence or absence of a victim.

Which they then talk about and point out that victims are absolutely present in this case…

If this is still too hard to understand i will simplify the sentence. They are saying:

“The important thing to talk about is, whether there is a victim or not.”

It doesn’t matter if there’s a victim or not. It’s the depiction of CSA that is illegal.

So no, talking about whether or not there’s a victim is not the most important part.

It doesn’t matter if you draw it by hand with crayons. If it’s depicting CSA it’s illegal.

Nobody was talking about the “legality”. We are talking about morals. And morally there is a major difference.

I wish I was as composed as you. You’re still calmly explaining things to that dumb fuck, while they move the goalposts back and forth:

- first they lie I was saying there were no victims;

- then they backpedal and say “It doesn’t matter if there’s a victim or not. It’s the depiction of CSA that is illegal.”;

- then they backpedal again and say what boils down to “talking about morals bad! Also I’ll talk about MY morals. I don’t see moral difference when people are harmed and when they’re not” (inb4 I’m abridging it)

All of that while they’re still pretending to argue the same point. It reminds me of a video from the Alt-Right Playbook, called “never play defence”: make a dumb claim, waste someone else’s time expecting them to rebut that dumb claim, make another dumb claim, waste their time again, and so on.

Talking about morals and morality is how you end up getting things like abortion banned. Because some people felt morally superior and wanted to enforce their superior morality on everyone else.

There’s no point in bringing it up. If you need to bring up morals to argue your point, you’ve already failed.

But please do enlighten me, because personally I don’t think there’s a moral difference between depicting “victimless” CSAM and CSAM containing a real person.

I think they’re both, morally, equally awful.

But you said there’s a major moral difference? For you maybe.

That is a lot of text for someone that couldn’t even be bothered to read the first paragraph of the article.

Grok has the ability to take photos of real people, including minors, and produce images of them undressed or in otherwise sexually compromising positions, flooding the site with such content.

There ARE victims, lots of them.

You’re only rewording what I said in the third paragraph, while implying I said the opposite. And bullshitting/assuming/lying I didn’t read the text. (I did.)

Learn to read dammit. I’m saying this shit Grok is doing is harmful, and that people ITT arguing “is this CSAM?” are missing the bloody point.

Is this clear now?

Yes, it certainly comes across as you arguing for the opposite, since you reiterated above:

The real thing to talk about is the presence or absence of a victim.

Which has never been an issue. It has never mattered in CSAM if it’s fictional or not. It’s the depiction that is illegal.

Yes, it certainly comes across as you arguing for the opposite

No, it does not. Stop being a liar.

Or, even better: do yourself a favour and go offline. Permanently. There’s already enough muppets like you: assumptive pieces of shit lacking basic reading comprehension, but still eager to screech at others — not because of what the others actually said, but because of what they assumed over it. You’re dead weight in any serious discussion, probably in some unserious ones too, and odds are you know it.

Also, I’m not wasting my time further with you, go be functionally illiterate elsewhere.

Ok. You’re right. You saying it’s ok to depict CSAM if there isn’t a victim is not you arguing the opposite. It’s me lying.

You’re so smart. Good job.

Is it so hard to admit that you misunderstood the comment ffs? It is painfully obvious to everyone.