I accidentally untarred an archive intended to be extracted in the root directory, which among other things included some files for the /etc directory.

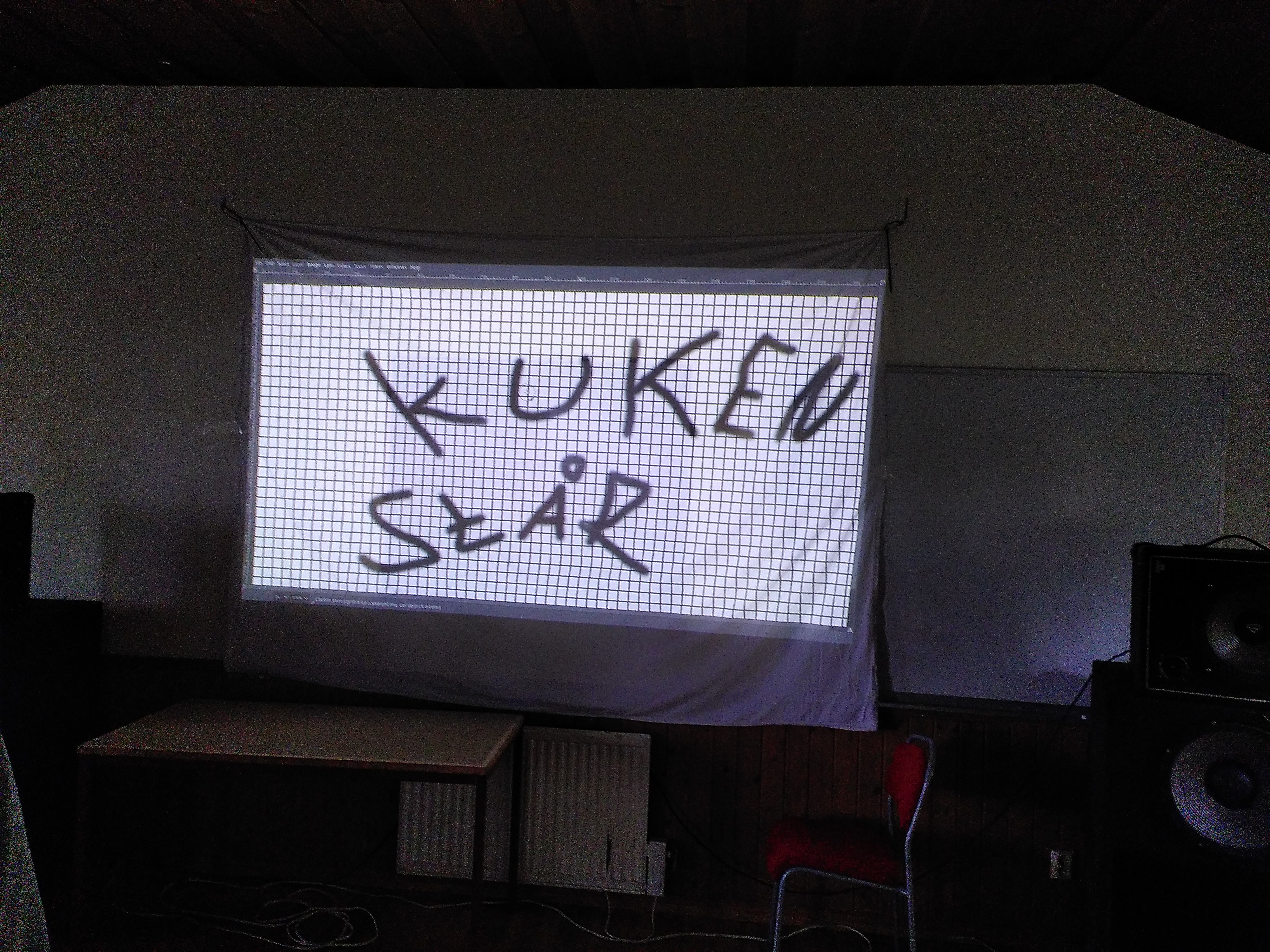

I went on to rm -rv ~/etc, but I quickly typed rm -rv /etc instead, and hit enter, while using a root account.

Ohohoho man did you ever fuck up. I did that once too. I can’t remember how I fixed it. I think I had to reinstall the whole OS

OOOOOOOOOOOF!!

One trick I use, because I’m SUPER paranoid about this, is to mv things I intend to delete to /tmp, or make /tmp/trash or something.

That way, I can move it back if I have a “WHAT HAVE I DONE!?” moment, or it just deletes itself upon reboot.
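A minimal sketch of that trick as a shell function, assuming a POSIX-like shell. The name `trash` and the `TRASH_DIR` variable are made up for illustration, not any standard tool:

```shell
# Hypothetical helper: move targets into a per-user trash directory
# instead of deleting them. TRASH_DIR is an assumed name, not a standard.
TRASH_DIR="${TRASH_DIR:-${TMPDIR:-/tmp}/trash-$(id -u)}"

trash() {
    # Refuse to run without arguments.
    [ "$#" -gt 0 ] || { echo "usage: trash PATH..." >&2; return 2; }
    mkdir -p "$TRASH_DIR" || return 1
    # One timestamped subdirectory per call, so equal names do not collide.
    dest=$(mktemp -d "$TRASH_DIR/$(date +%Y%m%d-%H%M%S).XXXXXX") || return 1
    # "--" stops option parsing, so names starting with "-" are safe.
    mv -- "$@" "$dest"/ && echo "moved to $dest"
}
```

Usage would be something like `trash ~/etc`. Whether /tmp actually gets cleared on reboot depends on the distro, and if /tmp is a tmpfs, big directories end up in RAM, so pointing `TRASH_DIR` somewhere on disk may be the better choice.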

This needs to be higher in the comments!

Hey that’s a pretty good idea. I’m stealing that.

After being bitten by rm a few times, the impulse rises to alias the rm command so that it does an “rm -i” or, better yet, to replace the rm command with a program that moves the files to be deleted to a special hidden directory, such as ~/.deleted. These tricks lull innocent users into a false sense of security.

Be happy that you didn’t remember the ~ and put a space between it and etc 😃.

Ahh, the rites of passage!

So good to see that, even in 2026, the Unix Haters’ Handbook’s part on rm is still valid. See page 59 of the pdf.

The handbook has numbered pages, so why use “page X of the pdf”? I don’t see the page count in my mobile browser - you made me do math.

(I think it’s page number 22 btw, for anyone else wondering)

The handbook has numbered pages, so why use “page X of the pdf”?

Because the book’s page 1 is the pdf’s page 41, everything before is numbered with roman numerals :)

I also wasn’t expecting anyone to try and read with a browser or reader that doesn’t show the current page number

I don’t know if you use Firefox on your phone, but I do, and I fucking hate it that I can’t jump to a page or see the page number I’m on.

That is what I’m using. I don’t really read enough pdf:s to notice it normally, but I guess it’s another reason to get off my ass about switching browsers ¯\_(ツ)_/¯

The biggest flaw with cars is when they crash. When I crash my car due to user error, because I made a small mistake, this proves that cars are dangerous. Some other vehicles like planes get around this by only allowing trusted users to do dangerous actions, why can’t cars be more like planes? /s

Always back up important data, and always have the ability to restore your backups. If rm doesn’t get it, ransomware or a bad/old drive will.

A sysadmin deleting /bin is annoying, but it shouldn’t take them more than a few mins to get a fresh copy from a backup or a donor machine. Or to just be more careful instead.

Unix aficionados accept occasional file deletion as normal. For example, consider the following excerpt from the comp.unix.questions FAQ:

6) How do I “undelete” a file?

Someday, you are going to accidentally type something like:

rm * .foo

and find you just deleted “*” instead of “*.foo”. Consider it a rite of passage.

Of course, any decent systems administrator should be doing regular backups. Check with your sysadmin to see if a recent backup copy of your file is available.

“A rite of passage”? In no other industry could a manufacturer take such a cavalier attitude toward a faulty product. “But your honor, the exploding gas tank was just a rite of passage.”

There’s a reason sane programs ask for confirmation for potentially dangerous commands

Things like these are a rite of passage on Linux :)

Reusing names of critical system directories in subdirectories in your home dir.

Oh, my! Perfect use of that scene. I don’t always lol, when I say lol. But I lol’ed at this for real.

I agree with this take, don’t wanna blame the victim but there’s a lesson to be learned.

except if you read the accompanying text they already stated the issue by accidentally unpacking an archive to their user directory that was intended for the root directory. that’s how they got an etc dir in their user directory in the first place

Could make one archive intended to be unpacked from /etc/ and one archive that’s intended to be unpacked from /home/Alice/, that way they wouldn’t need to be root for the user bit, and there would never be an etc directory to delete. And if they run tar in list mode (t) and pwd first, they could check that the intended actions were correct before running the full extraction. Some tools can be dangerous, so the user should be aware, and have safety measures.
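A rough sketch of that pre-flight check, assuming a tar that supports `-t` and `-f` (GNU tar and bsdtar both do). The function name `preview_tar` is made up for illustration:

```shell
# Hypothetical helper: show where we are and what an archive would
# create here, without extracting anything.
preview_tar() {
    archive=$1
    echo "current directory: $(pwd)"
    echo "top-level entries that would be created here:"
    # -t lists members; strip a leading ./, keep only the first path
    # component, and drop empty lines left over from a bare "./" entry.
    tar -tf "$archive" | sed -e 's|^\./||' -e 's|/.*||' -e '/^$/d' | sort -u
}
```

Seeing `etc` and `home` in that list while the current directory is your home dir would be the hint to stop before extracting.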

they acquired a tar package from somewhere else. the instructions said to extract it to the root directory (because of its file structure). they accidentally extracted it to their home dir

that is how this happened. not anything like what you were saying

[OP] accidentally untarred archive intended to be extracted in root directory, which among others included some files for /etc directory.

I dunno, ~/bin is a fairly common thing in my experience, not that it ends up containing many actual binaries. (The system started it, miss, honest. A quarter of the things in my system’s /bin are text based.)

~/etc is seriously weird though. Never seen that before. On Debians, most of the user copies of things in /etc usually end up under ~/.local/ or at ~/.filenamehere

It should be ~/.local/bin

~/bin is the old-school location from before .local became a thing, and some of us have stuck to that ancient habit.

I think the home directory version of etc is ~/.config as per xdg.

I use ~/config/* to put directories named the same as system ones. I got used to it in BeOS and brought it to LFS when I finally accepted BeOS wasn’t doing what I needed anymore, kept doing it ever since.

So, you don’t do backups of /etc? Or parts of it?

I have those tars for ssh, pam, and portage on Gentoo systems. Quickest way to set stuff up.

And before you start whining about ansible or puppet or what, I need those maybe 3-4 times a year to set up a temporary hardened system.

But maybe, just maybe, don’t assume everyone is a fucking moron or has no idea.

Edit: Or just read what OP did, I think that is pretty much the same.

But maybe, just maybe, don’t assume everyone is a fucking moron or has no idea.

Well, OP didn’t say they used Arch, btw so it’s safe to assume.

(I hate that this needs a /s)

“Just a little off the top please”

Dumbfuck logged in as root.

alias rm="rm -i"

alias rm="echo no"

This is why you should setup daily snapshots of your system volumes.

Btrfs and ZFS exist for a reason.

Wish ZFS didn’t constantly cause my proxmox to need to be forcefully restarted after the ZFS pool crashed randomly.

I get months of uptime on a ZFS NAS, though I’m not using Proxmox. I don’t think it’s the filesystem’s fault, you might have some hardware issue tbh. Do you have some logs?

I just reformatted back to ext after messing with it for about a month, been totally fine since.

I do also assume it was something screwy with how it was handling my consumer m2

I am running a zfs raidz1-0 pool on 3 consumer nvme in my workstation, doing crazy stuff on it.

Ran zfs under proxmox with enterprise nvme and had the same issue.

It is proxmox, not zfs

That or make your system immutable

You can still break your /etc folder. But many other folders are safe.

Personally I do both.

Use Nix. And keep your system config in git.

That’s my current approach. Fedora Atomic, and let someone else break my OS instead of me.

Your first mistake was attempting to unarchive to / in the first place. Like WTF. Why would this EVER be a sane idea?

I don’t know if it should be a bad thing. Inside the tar archive the configs were already organized into their respective directories, this way with --preserve-permissions --overwrite I could just quickly add the desired versions of configs.

Some examples of contents:

    -rw-r--r-- root/root 2201 2026-02-18 08:08 etc/pam.d/sshd
    -rw-r--r-- root/root  399 2026-02-17 23:22 etc/pam.d/sudo
    -rw-r--r-- root/root 2208 2026-02-18 09:13 etc/sysctl.conf
    drwx------ user/user    0 2026-02-17 23:28 home/user/.ssh/
    -rw------- user/user  205 2026-02-17 23:29 home/user/.ssh/authorized_keys
    drwxrwxr-x user/user    0 2026-02-17 16:30 home/user/.vnc/
    -rw-rw-r-- user/user   85 2026-02-18 15:32 home/user/.vnc/tigervnc.conf
    -rw-r--r-- root/root 3553 2026-02-18 08:04 etc/ssh/sshd_config

Keeps permissions, keeps ownership, puts things where they belong (or copies from where they were), and you end up with a single file that can be stored on whatever filesystem.
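For what it’s worth, one way to make this kind of extraction independent of wherever the shell happens to be is to name the target explicitly with `-C`. A small sketch, where the function name `extract_to` is made up and the GNU-only `--overwrite` is left out so it also runs on bsdtar:

```shell
# Hypothetical helper: extract an archive into an explicitly named
# directory, so the result does not depend on the current directory.
extract_to() {
    archive=$1
    target=$2
    # Fail early instead of letting tar guess.
    [ -d "$target" ] || { echo "no such directory: $target" >&2; return 1; }
    # -C switches to the target before extracting; -p keeps permissions.
    tar -xpf "$archive" -C "$target"
}
```

Something like `extract_to configs.tar /` as root would then land in / even when run from the home directory (`configs.tar` being a placeholder name here).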

I assumed something like this. That’s a perfectly valid usecase for a tar extracted to /.

But I love it how people always jump to the assumption that the one on the other end is the stupid one

that was my reaction when I saw a coworker put random files and directories into / of a server

I feel like some people don’t have a feeling about how a file system works

It’s a pretty common Windows server practice to just throw random shit on the root directory of the server. I’m guilty of this at times when there isn’t a better option available to me, but I at least use a dedicated directory at the root for dumping random crap and organize the files within that directory (and delete unneeded files when done) so that it doesn’t create more work later.

Ahh, good old /opt/

What’s so bad about that? Except that it triggers me to not have it organized.

hard to properly set permissions and organize

Maybe they do and don’t fear the FHS? I mean, do you use the FHS in a docker container?

HAH rookie, I once forgot the . before the ./

o.7

Did you try CTRL-Z?

Instructions unclear, switched to vi mode in bash and can’t exit

F

(That’s not going to help you, just paying my respects.)

I can’t type ctrl-z without reflexively typing bg after, so no joy there.